Exploring the architecture of intelligent systems — from model design and optimization to the mechanics of inference at scale.

AI engineering covers the lifecycle around these models: the infrastructure, monitoring, and iterative controls that keep learning systems dependable and scalable in production.

Principal Component Analysis (PCA) is a widely used method for dimensionality reduction in data science and machine learning. It transforms a set of correlated variables into a smaller number of uncorrelated components, ordered by how much of the data's variance each one captures. This makes computation more efficient and reveals the dominant structure in complex datasets. PCA offers both mathematical clarity and practical value for working with high-dimensional data.
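
A minimal NumPy sketch of PCA via the singular value decomposition, assuming a dense data matrix that fits in memory. The function name `pca` and the synthetic dataset (three features driven by one hypothetical latent factor) are illustrative, not taken from any particular library. The data is centered first, the rows of `Vt` give the principal directions, and projecting onto the top `k` of them yields uncorrelated scores.

```python
import numpy as np

def pca(X: np.ndarray, k: int):
    """Project rows of X (n_samples x n_features) onto the top-k principal components."""
    # PCA is defined on mean-centered data.
    Xc = X - X.mean(axis=0)
    # Thin SVD: Xc = U @ diag(S) @ Vt. Rows of Vt are the principal directions,
    # already ordered by decreasing singular value.
    U, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    components = Vt[:k]                       # (k, n_features)
    scores = Xc @ components.T                # (n_samples, k), uncorrelated columns
    # Variance along each direction is S**2 / (n - 1); normalize to get the explained ratio.
    explained_var = S**2 / (X.shape[0] - 1)
    ratio = explained_var[:k] / explained_var.sum()
    return scores, components, ratio

# Hypothetical data: three correlated features generated from one latent factor plus noise.
rng = np.random.default_rng(0)
z = rng.normal(size=(500, 1))
X = np.hstack([z, 2 * z, -z]) + 0.1 * rng.normal(size=(500, 3))

scores, components, ratio = pca(X, k=2)
print(scores.shape, ratio.round(3))
```

Because the three features are nearly collinear, almost all of the variance should land on the first component, which is the "dominant structure" the paragraph refers to.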

Principal Component Analysis (PCA) is a widely used method for dimensionality reduction in data science and machine learning. It transforms interdependent variables into fewer independent dimensions, making computation more efficient and revealing the dominant structure in complex datasets. PCA provides both mathematical clarity and practical value, helping to work effectively with high-dimensional data.

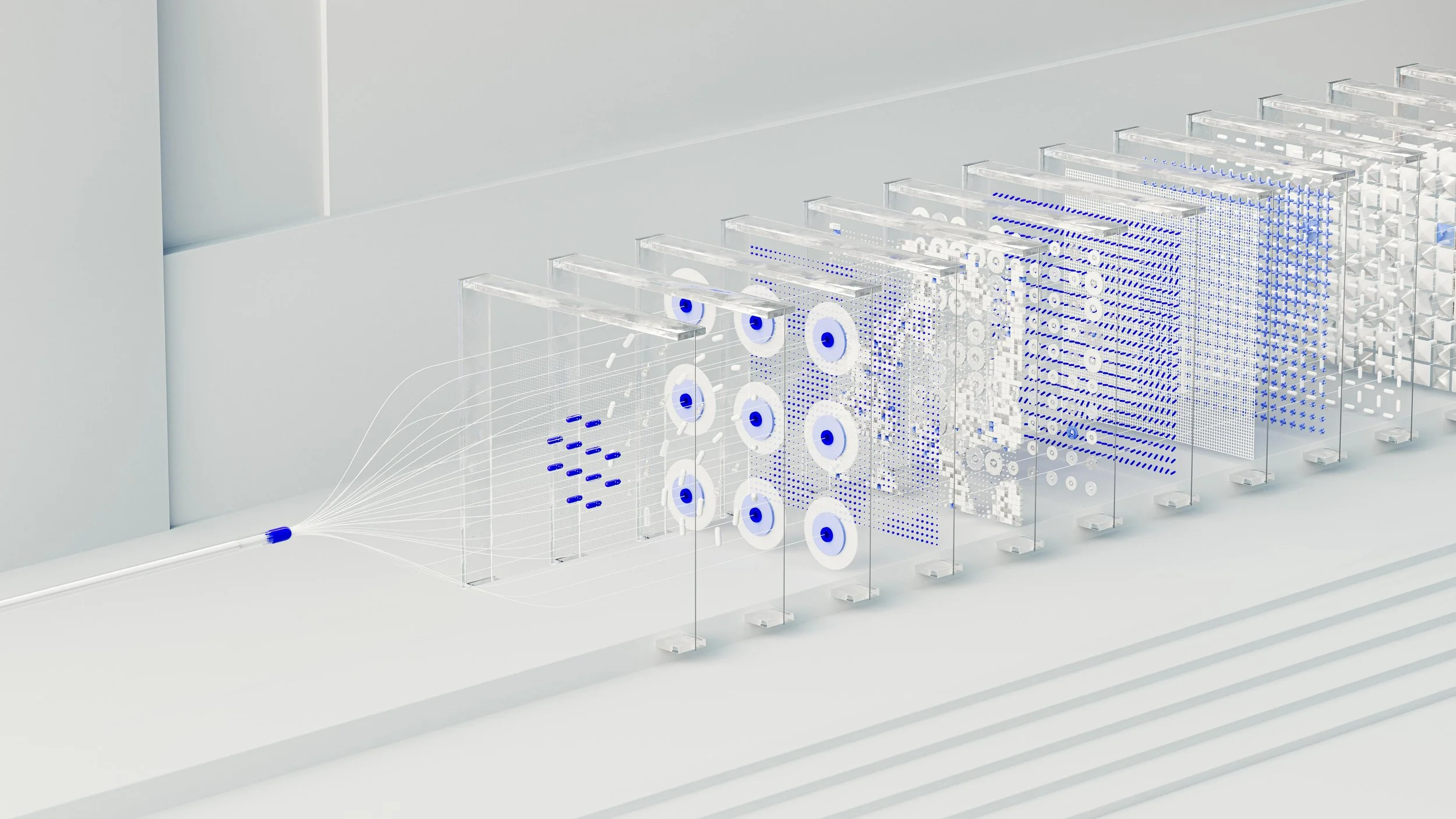

Convolutional Neural Networks (CNNs) process grid-structured data such as images by stacking convolutional filters, pooling layers, and normalization layers. Architectures from VGG to ResNet show how design choices affect efficiency and gradient flow, introducing bottleneck structures and residual connections along the way. Beyond these foundations, training techniques such as mixed-precision arithmetic and learning-rate scheduling, together with attention mechanisms, extend CNNs into more efficient and context-aware models.
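
To make the building blocks concrete, here is a minimal single-channel NumPy sketch of a convolution, ReLU, 2x2 max pooling, and a ResNet-style residual block. It assumes odd kernel sizes, zero padding, and even spatial dimensions for pooling; the helper names are hypothetical, and batch normalization (part of the real ResNet basic block) is omitted for brevity. The loops favor readability over speed.

```python
import numpy as np

def conv2d(x, w, b=0.0):
    """Single-channel 'same' 2D cross-correlation (what deep-learning libraries call convolution)."""
    kh, kw = w.shape
    ph, pw = kh // 2, kw // 2
    xp = np.pad(x, ((ph, ph), (pw, pw)))      # zero padding keeps output size equal to input (odd kernels)
    out = np.empty_like(x, dtype=float)
    for i in range(x.shape[0]):
        for j in range(x.shape[1]):
            out[i, j] = np.sum(xp[i:i + kh, j:j + kw] * w) + b
    return out

def relu(x):
    return np.maximum(x, 0.0)

def max_pool2x2(x):
    """2x2 max pooling with stride 2; assumes even height and width."""
    h, w = x.shape
    return x.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))

def residual_block(x, w1, w2):
    """y = relu(x + F(x)) with F = conv -> relu -> conv (batch norm omitted)."""
    f = conv2d(relu(conv2d(x, w1)), w2)
    return relu(x + f)                        # identity shortcut lets gradients bypass F

# Usage on a hypothetical 8x8 single-channel input with small random kernels.
rng = np.random.default_rng(0)
image = rng.normal(size=(8, 8))
w1, w2 = 0.1 * rng.normal(size=(3, 3)), 0.1 * rng.normal(size=(3, 3))
y = residual_block(image, w1, w2)
pooled = max_pool2x2(y)
print(y.shape, pooled.shape)  # (8, 8) (4, 4)
```

The residual addition is the design choice worth noticing: if the learned branch `F` contributes nothing (zero weights), the block reduces to `relu(x)` rather than destroying the signal, which is one intuition for why residual connections ease gradient flow in deep stacks.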

Transformer architecture expressed through clear computational logic.

Understanding how attention, normalization, and residual connections interact gives engineers a concrete grasp of model behavior, so that design choices, interpretability work, and extensions rest on the actual mechanics.
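
The interaction of attention, normalization, and residuals can be sketched as a single Transformer block in NumPy. This is a minimal illustration under stated assumptions: one attention head, layer normalization without learned gain and bias, a ReLU feed-forward layer, no dropout, and a pre-norm layout (the original Transformer applied normalization after each residual addition instead). The parameter names `Wq`, `Wk`, `Wv`, `Wo`, `W1`, `W2` are hypothetical.

```python
import numpy as np

def layer_norm(x, eps=1e-5):
    # Normalize each token vector to zero mean and unit variance (learned scale/shift omitted).
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)   # subtract the row max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x, Wq, Wk, Wv, causal=False):
    q, k, v = x @ Wq, x @ Wk, x @ Wv          # (T, d) queries, keys, values
    scores = q @ k.T / np.sqrt(q.shape[-1])   # (T, T) scaled dot products
    if causal:
        T = x.shape[0]
        mask = np.triu(np.ones((T, T), dtype=bool), k=1)
        scores = np.where(mask, -np.inf, scores)  # block attention to future positions
    weights = softmax(scores, axis=-1)        # each row is a distribution over positions
    return weights @ v, weights

def transformer_block(x, params, causal=False):
    # Pre-norm layout: x + Attn(LN(x)), then x + FFN(LN(x)).
    attn_out, weights = self_attention(
        layer_norm(x), params["Wq"], params["Wk"], params["Wv"], causal)
    x = x + attn_out @ params["Wo"]           # residual around attention
    h = np.maximum(layer_norm(x) @ params["W1"], 0.0)
    x = x + h @ params["W2"]                  # residual around the feed-forward layer
    return x, weights

# Usage with hypothetical sizes: 4 tokens, model width 8, feed-forward width 32.
rng = np.random.default_rng(0)
T, d, d_ff = 4, 8, 32
shapes = {"Wq": (d, d), "Wk": (d, d), "Wv": (d, d), "Wo": (d, d),
          "W1": (d, d_ff), "W2": (d_ff, d)}
params = {name: 0.1 * rng.normal(size=s) for name, s in shapes.items()}
x = rng.normal(size=(T, d))
y, w = transformer_block(x, params, causal=True)
print(y.shape, w.round(2))
```

Inspecting `w` is a small example of grounding interpretability in the mechanics: each row shows how much a token draws from earlier positions, and with `causal=True` the upper triangle is exactly zero.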