Convolutional Neural Networks: Principles, Architectures, and Modern Extensions

Convolutional Neural Networks process grid-structured data, such as images, using convolutional filters, pooling layers, and normalization techniques. The progression from VGG to ResNet shows how architectural choices affect computational efficiency and gradient flow, with ResNet's bottleneck blocks and residual connections making much deeper networks trainable. Beyond these foundations, training techniques such as mixed-precision arithmetic and learning-rate scheduling, together with attention mechanisms, extend CNNs into more efficient and context-aware models.
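To make these building blocks concrete, here is a minimal single-channel sketch in pure Python. It assumes no batches, no multiple channels, stride 1, and fixed example kernels instead of learned weights, and it omits the batch normalization ResNet places after each convolution. All function names are hypothetical, not a framework API. It shows a "valid" convolution (implemented, as in most deep learning frameworks, as cross-correlation without flipping the kernel), 2×2 max pooling, and a basic residual block computing relu(F(x) + x).

```python
from typing import List

Matrix = List[List[float]]


def conv2d(x: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2-D cross-correlation, stride 1 (what DL frameworks call convolution)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(x) - kh + 1, len(x[0]) - kw + 1
    return [
        [sum(x[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
         for j in range(out_w)]
        for i in range(out_h)
    ]


def zero_pad(x: Matrix, p: int) -> Matrix:
    """Surround x with p rows/columns of zeros on every side."""
    width = len(x[0]) + 2 * p
    top = [[0.0] * width for _ in range(p)]
    body = [[0.0] * p + list(row) + [0.0] * p for row in x]
    bottom = [[0.0] * width for _ in range(p)]
    return top + body + bottom


def relu(x: Matrix) -> Matrix:
    """Element-wise max(0, v)."""
    return [[max(0.0, v) for v in row] for row in x]


def max_pool2d(x: Matrix, size: int = 2) -> Matrix:
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    return [
        [max(x[i + a][j + b] for a in range(size) for b in range(size))
         for j in range(0, len(x[0]) - size + 1, size)]
        for i in range(0, len(x) - size + 1, size)
    ]


def residual_block(x: Matrix, k1: Matrix, k2: Matrix) -> Matrix:
    """relu(F(x) + x) with F = conv -> relu -> conv.

    Odd, square kernels are assumed so that padding by k // 2 keeps the
    spatial size unchanged and the skip connection can be added element-wise.
    """
    h = relu(conv2d(zero_pad(x, len(k1) // 2), k1))
    f = conv2d(zero_pad(h, len(k2) // 2), k2)
    # Skip connection: add the unchanged input back before the final ReLU.
    return relu([[fv + xv for fv, xv in zip(f_row, x_row)] for f_row, x_row in zip(f, x)])


if __name__ == "__main__":
    image = [[1, 2, 3, 0], [4, 5, 6, 1], [7, 8, 9, 2], [0, 1, 2, 3]]
    print(conv2d(image, [[1, 0], [0, -1]]))  # [[-4, -4, 2], [-4, -4, 4], [6, 6, 6]]
    print(max_pool2d(image))                 # [[5, 6], [8, 9]]
    identity = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
    # With identity kernels F(x) = x for non-negative x, so every entry is doubled.
    print(residual_block(image, identity, identity))
```

Because the skip connection adds x back unchanged, the Jacobian of F(x) + x with respect to x is the Jacobian of F plus the identity, which is the mechanism behind the improved gradient flow described above. The bottleneck variant replaces the two 3×3 convolutions with a 1×1, 3×3, 1×1 sequence that first reduces and then restores the channel count; this single-channel sketch cannot show that channel reduction.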

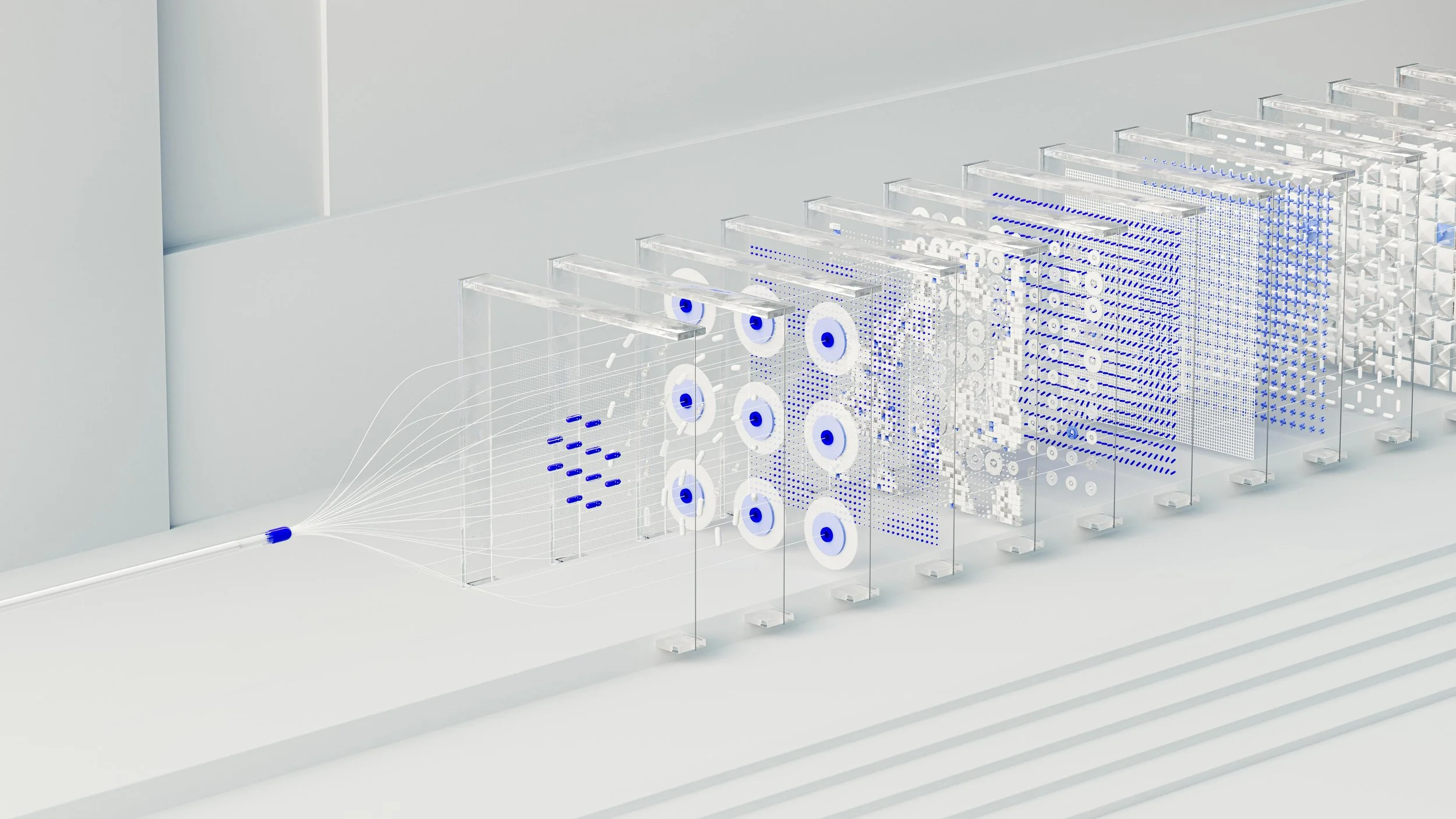

Transformers: Architecture, Operating Principles, and Application Examples

The Transformer architecture, explained through the computations each of its components performs.

Understanding how attention, normalization, and residual connections interact gives engineers a concrete grasp of model behavior, so that design choices, interpretability work, and architectural extensions are grounded in the actual mechanics.
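As a minimal sketch of how these three pieces combine, the following pure-Python code implements one post-norm attention sublayer of the form LayerNorm(x + Attention(x)), the arrangement used in the original Transformer, with scaled dot-product attention softmax(QKᵀ/√d_k)V. It assumes a single head with no learned query/key/value projections (Q = K = V = x) and omits LayerNorm's learned gain and bias; the function names are illustrative, not any library's API.

```python
import math
from typing import List

Vec = List[float]


def softmax(xs: Vec) -> Vec:
    """Softmax with the maximum subtracted first for numerical stability."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def attention(q: List[Vec], k: List[Vec], v: List[Vec]) -> List[Vec]:
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V, one query row at a time."""
    d_k = len(k[0])
    out = []
    for qi in q:
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d_k) for kj in k]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        out.append([sum(w * vj[c] for w, vj in zip(weights, v)) for c in range(len(v[0]))])
    return out


def layer_norm(x: Vec, eps: float = 1e-5) -> Vec:
    """Normalize one token vector to zero mean and unit variance (no learned gain/bias)."""
    mean = sum(x) / len(x)
    var = sum((a - mean) ** 2 for a in x) / len(x)
    return [(a - mean) / math.sqrt(var + eps) for a in x]


def attention_sublayer(x: List[Vec]) -> List[Vec]:
    """Post-norm residual sublayer: LayerNorm(x + SelfAttention(x)).

    Simplification: Q = K = V = x (single head, no learned projections).
    """
    attn = attention(x, x, x)
    # Residual connection, then per-token layer normalization.
    return [layer_norm([a + b for a, b in zip(xi, ai)]) for xi, ai in zip(x, attn)]


if __name__ == "__main__":
    tokens = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 1.0]]
    for row in attention_sublayer(tokens):
        print([round(v, 3) for v in row])  # each row has ~zero mean and ~unit variance
```

The original Transformer applies this post-norm pattern to both its attention and feed-forward sublayers. Many later models instead normalize before each sublayer (pre-norm, x + Attention(LayerNorm(x))), which tends to make deep stacks easier to train.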